How Much Is It To Create A Snapchat Filter

How to create SnapChat lenses using pix2pix

We all love SnapChat lenses/filters, but ever wondered how you can make your own?

![]()

Yes, I know subtitle is from my previous article where I showed how to make SnapChat like live filters using dlib and openCV. But today I wanted to show how we can create the same filters using a Deep Learning network called pix2pix. This approach helps to eliminate manual feature engineering step from the previous approach and can directly output target image with just one inference from the neural network. So, let's get started.

What is pix2pix?

It is published in paper Image-to-Image Translation with Conditional Adversarial Networks (Commonly known as pix2pix).

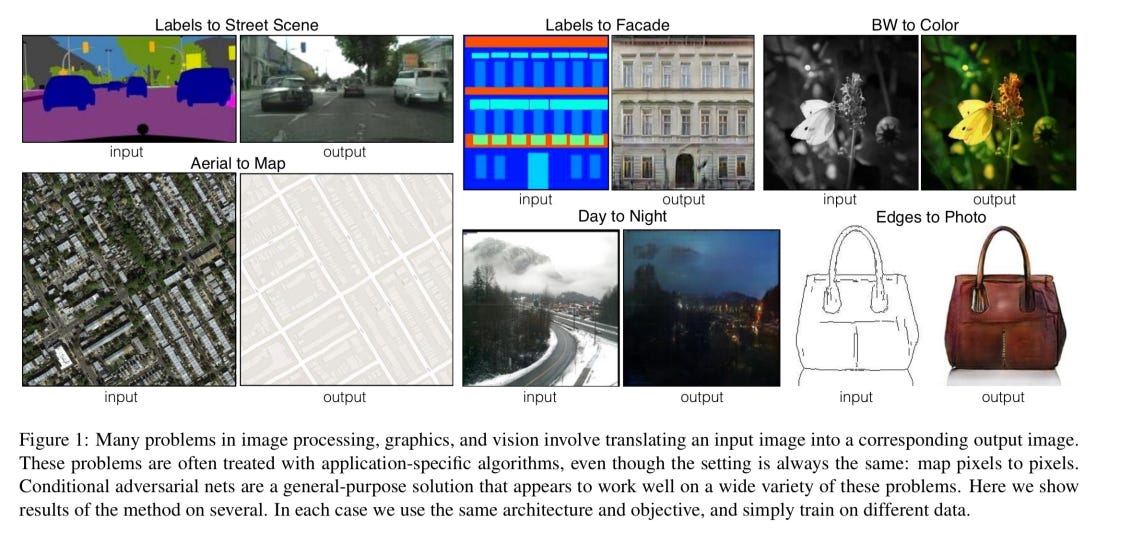

The name 'p i x2pix' comes from the fact that the network is trained to map from input pixels to output pixels, where the output is some translation of the input. You can see some examples in the image below,

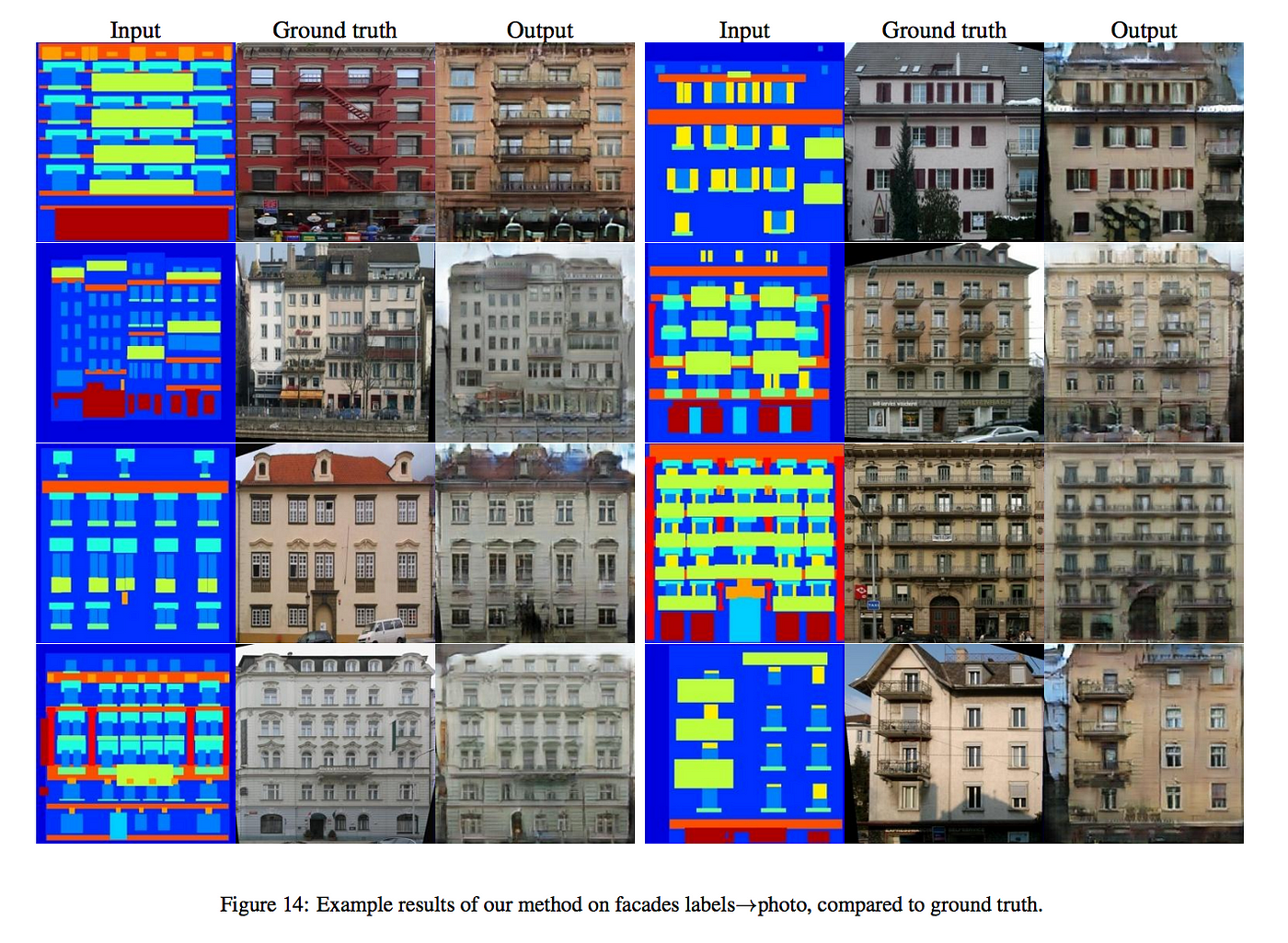

The really fascinating part about pix2pix is that it is a general-purpose image-to-image translation. Instead of designing custom networks for each of the tasks above, it's the same model handling all of them — just trained on different datasets for each task. Pix2pix can produce effective results with way fewer training images, and much less training time. Given a training set of just 400 (facade, image) pairs, and less than two hours of training on a single Pascal Titan X GPU, pix2pix can do this:

How pix2pix Network works?

pix2pix network uses 2 networks to train.

- Generator

- Discriminator

Here, Generator will try to generate output image from the input training data and Discriminator will try to identify whether the output is fake or real. So, both networks will try to increase their accuracy which will eventually result into a really good Generator. So, you can think the basic structure of the network as encoder-decoder.

For example, the generator is fed an actual image(input) that we want to "translate" into another structurally similar image(real output). Our generator now produces fake output, which we want to become indistinguishable from real output. So, we simply collect lot of examples of 'the real thing' and ask the network to generate images that can't be distinguished from them.

Approach

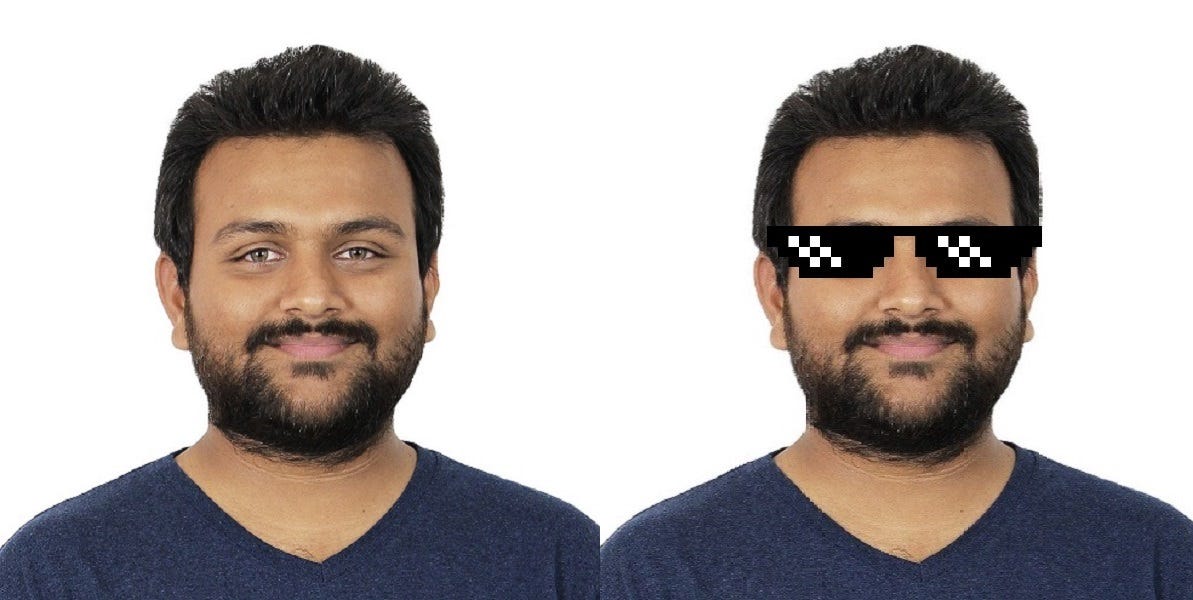

Today we will use above mentioned model to create a SnapChat filter. I will use image below to show the results. All the images are 256x256 because I trained on the same size (Yes, I don't have enough GPU power to train HD)

Now, let's gather training data! For that I will use my previous tutorial code . I downloaded bunch of face images data sets and also used google to download face images.

I applied "Thug Life Glasses" to all the images and placed them side by side as training data requires that format. I am using following pix2pix training repository which uses tensorflow to train and show results.

Once you finish with generating training data it will look like below:

Now we will start training. For training we should use following command inside the pix2pix-tensorflow repository

python pix2pix.py --mode train --output_dir dir_to_save_checkpoint --max_epochs 200 --input_dir dir_with_training_data --which_direction AtoB

Here, AtoB defines which side to train the model. For example, in above image AtoB means model will learn to convert normal face to face with glasses.

You can see the results on the training data and the graphs in the tensorboard which you can start by following command:

tensorboard --logdir=dir_to_save_checkpoint Once you start seeing decent results for you model stop the training and use evaluation data to check real-time performance. You can start training from the last checkpoint if you think results are not good enough for the real-time performance.

python pix2pix.py --mode train --output_dir dir_to_save_checkpoint --max_epochs 200 --input_dir dir_with_training_data --which_direction AtoB --checkpoint dir_of_saved_checkpoint Conclusion

Conditional adversarial networks are a promising approach for many image-to-image translation tasks. You need to train properly and please use a good GPU for it as I am getting following output with less training and not much training data variance.

How Much Is It To Create A Snapchat Filter

Source: https://towardsdatascience.com/using-pix2pix-to-create-snapchat-lenses-e9520f17bad1

Posted by: drinnonhused1980.blogspot.com

0 Response to "How Much Is It To Create A Snapchat Filter"

Post a Comment